Abstract

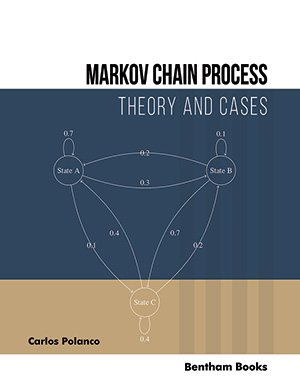

In this chapter, building on the historical introduction given in the previous chapters, we introduce and exemplify all the components of a Markov Chain Process: the initial state vector, the Markov property, the matrix of transition probabilities, and the steady-state vector. A Markov Chain Process is formally defined and, by way of categorization, divided into two types: Discrete-Time Markov Chain Process and Continuous-Time Markov Chain Process, according to whether the time between states in a random walk is discrete or continuous. Each component is illustrated with examples, and all the examples are solved analytically.

Keywords: Continuous-Time Markov Chain Process, Discrete-Time Markov Chain Process, Ergodic Markov Chain Process, Initial State Vector, Markov Property, Matrix of Transition Probabilities, Regular Matrix, States, Steady-State Vector, Stochastic Matrix, Transition Probabilities